Reverse engineering Typeless.app with lightweight static analysis — no decompiler, no disassembler, just asar extract, grep, strings, and nm.

Introduction

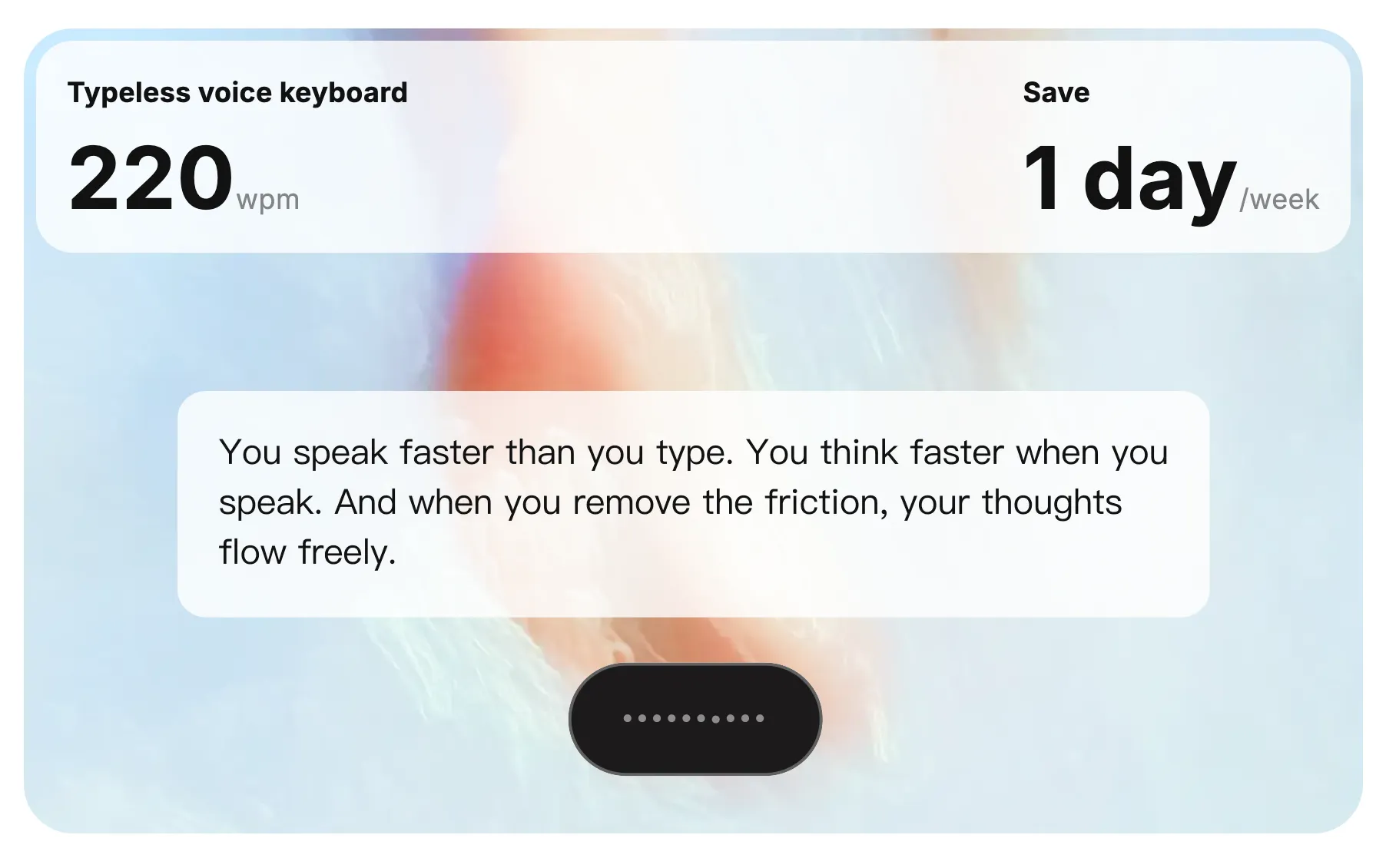

Typeless is a voice-first productivity tool that transcribes speech and types it directly into any application: not just a browser tab, but Slack, VS Code, Figma, or any macOS text field. It hooks into macOS at the system level through five custom native dynamic libraries (mostly Swift), uses the Accessibility API to read and write text in other apps, and runs an Opus audio compression pipeline before sending audio to a cloud AI backend.

I spent an evening reverse engineering Typeless v1.0.2 (build 83, Electron 33.4.11) on macOS using only standard CLI tools. The design decisions are driven entirely by the core problem: Typeless is a voice tool that needs audio processing, keystroke interception, and text insertion into arbitrary applications.

The Three-Layer Process Model

```text
LAYER 1: RENDERER            LAYER 2: MAIN PROCESS         LAYER 3: SWIFT NATIVE
(4 Chromium Windows)         (Node.js)                     (5 dylib + 1 binary)
┌─────────────────────┐      ┌──────────────────────┐      ┌─────────────────────┐
│ React 18            │      │ Drizzle ORM + libSQL │      │ libContextHelper    │
│ MUI (Material UI)   │      │ koffi (FFI bridge)   │      │ → AXUIElement       │
│ Recoil              │ IPC  │ electron-store       │ FFI  │ → getFocusedApp     │
│ ECharts             │◄────►│ electron-updater     │◄────►│ libInputHelper      │
│ i18next (58 langs)  │      │ node-schedule        │koffi │ → insertText        │
│ Framer Motion       │      │ Sentry               │      │ → simulatePaste     │
│ Floating UI         │      │ Opus Worker Pool     │      │ libKeyboardHelper   │
│ diff                │      │ Mixpanel             │      │ → CGEventTap        │
│ Shiki               │      │ undici               │      │ libUtilHelper       │
└─────────────────────┘      └──────────────────────┘      │ → audio devices     │
           ⬇                            ⬇                  │ libopusenc          │
10.6 MB JS bundle            254 KB main process           │ → WAV→OGG/Opus      │
57 KB CSS                    3.5 KB Opus worker            └─────────────────────┘
65 lazy chunks               SQLite via libSQL             5 universal dylibs
4 HTML entry points          3 electron-store files        (x86_64 + arm64)
```

The native layer is not a CLI tool: apart from one small helper binary (macosCheckAccessibility), it consists of five dynamically loaded native libraries called via koffi, a Node.js FFI (Foreign Function Interface) library. This means the main process can call Swift functions synchronously from JavaScript without spawning child processes.

Core Design 1: The Multi-Window Architecture

Most Electron apps have one window. Typeless has four, each serving a distinct interaction model:

| Window | HTML Entry | Purpose | Behavior |
|---|---|---|---|
| Hub | hub.html | Main dashboard, history, settings | Standard app window |
| Floating Bar | floating-bar.html | Always-on-top recording indicator | Transparent, pointer-events: none, click-through |
| Sidebar | sidebar.html | Docked to screen edge, pinned panel | Always-on-top, 600×700, snaps to left screen |
| Onboarding | onboarding.html | First-run setup flow | Allows Microsoft Clarity scripts |

The floating bar is the most interesting one architecturally. It’s a transparent overlay window that floats above all other apps while recording. The CSS explicitly sets pointer-events: none and background: transparent — this means mouse events pass through to the app underneath. When the user hovers over the bar itself, the main process toggles setIgnoreMouseEvents(false) to make it clickable, then re-enables click-through when the mouse leaves:

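```javascript
// Main process IPC handler (excerpt from a switch over the event name)
case "mouse-enter":
  // Pointer is over the bar: accept clicks.
  floatingBarWindow.setIgnoreMouseEvents(false);
  break;
case "mouse-leave":
  // Click-through again; { forward: true } keeps forwarding mouse-move
  // events to the page so the next hover can still be detected.
  floatingBarWindow.setIgnoreMouseEvents(true, { forward: true });
  break;
```

This is how Typeless stays visible while you work in other apps without interfering with your workflow. The sidebar does something similar: it docks to the leftmost screen edge and clamps its vertical position so it can’t be dragged off-screen.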
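The sidebar’s docking rule can be expressed as a pure function over window bounds. This is a minimal sketch, not Typeless’s code: clampSidebarBounds is a hypothetical name, and the { x, y, width, height } shape follows Electron’s Rectangle convention. In a real main process the work areas would come from Electron’s screen.getAllDisplays() and the result would be applied with BrowserWindow.setBounds().

```javascript
// Hypothetical sketch: dock a sidebar to the left edge of the leftmost display
// and keep it vertically inside that display's work area.
function clampSidebarBounds(bounds, workAreas) {
  // Pick the display whose work area starts furthest to the left.
  const leftmost = workAreas.reduce((a, b) => (b.x < a.x ? b : a));
  const maxY = leftmost.y + leftmost.height - bounds.height;
  // Clamp y into [top, bottom - height]; if the window is taller than the
  // work area, the outer Math.max pins it to the top.
  const y = Math.max(leftmost.y, Math.min(bounds.y, maxY));
  return { x: leftmost.x, y, width: bounds.width, height: bounds.height };
}

// A 600x700 sidebar dragged past the bottom of a display whose work area
// starts below a 25 px menu bar.
const docked = clampSidebarBounds(
  { x: 300, y: 500, width: 600, height: 700 },
  [{ x: 0, y: 25, width: 1440, height: 875 }]
);
console.log(docked); // { x: 0, y: 200, width: 600, height: 700 }
```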

There’s also a media app detection system: when Apple Music, TV, or iTunes is in the foreground, the floating bar and sidebar automatically hide to avoid interfering with media playback. They reappear when you switch back to a regular app.
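The media-app rule reduces to a set lookup on the frontmost app’s bundle ID. A hedged sketch: the three bundle IDs are the standard ones for Music, TV, and iTunes, but the exact list Typeless checks, and the overlayVisibility helper, are assumptions.

```javascript
// Hypothetical sketch: decide overlay visibility from the frontmost app.
const MEDIA_BUNDLE_IDS = new Set([
  "com.apple.Music",  // Apple Music (assumed to be on the list)
  "com.apple.TV",     // Apple TV app (assumed)
  "com.apple.iTunes", // iTunes on older macOS (assumed)
]);

function overlayVisibility(frontmostBundleId) {
  const hide = MEDIA_BUNDLE_IDS.has(frontmostBundleId);
  return { floatingBar: !hide, sidebar: !hide };
}

console.log(overlayVisibility("com.apple.Music"));           // { floatingBar: false, sidebar: false }
console.log(overlayVisibility("com.tinyspeck.slackmacgap")); // { floatingBar: true, sidebar: true }
```

Because the decision is recomputed on every focus change, the overlays come back as soon as a non-media app is frontmost.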

Core Design 2: The Swift FFI Bridge

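The most architecturally significant decision is using koffi, a Node.js FFI library, to call Swift code directly from the main process. This avoids spawning child processes, and it avoids writing and compiling a custom N-API addon for each Swift library: koffi itself ships as a single prebuilt native addon (it shows up among the .node files in app.asar.unpacked).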

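Five dylibs are loaded at runtime: libContextHelper, libInputHelper, libKeyboardHelper, libUtilHelper, and libopusenc.

Each dylib is a universal binary (x86_64 + arm64). The four helper libraries are compiled from Swift source, while libopusenc wraps the C libopus/libopusenc encoder. Here’s what strings and nm reveal about each helper: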

libContextHelper — The App Awareness Layer

This library answers the question: “What app is the user working in, and what text is visible?”

Exported functions (from nm -g):

- getFocusedAppInfo() → returns JSON: { appName, bundleId, webURL, windowTitle }
- getFocusedElementInfo() → AXUIElement properties of the focused text field
- getFocusedVisibleText(maxLength, timeout) → visible text content near the cursor
- getFocusedElementRelatedContent(before, after, timeout) → text before/after the cursor
- setFocusedWindowEnhancedUserInterface() → enables enhanced AX for the target app

The key detail: all functions have both sync and async variants (e.g., getFocusedAppInfoAsync). The async versions take a C callback pointer registered via koffi.register(). This matters because Accessibility API calls can block — querying a frozen app could hang the main process. The async variants run on a separate thread with a 500ms timeout, falling back to an empty result if the target app doesn’t respond.
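The timeout-with-fallback behavior of the async variants can be modeled as a race between the native callback and a timer. This is a sketch under assumptions: in Typeless the 500 ms timeout appears to be enforced by the native async variant itself, whereas here it is applied on the JavaScript side, and slowNativeQuery is a hypothetical stand-in for a koffi-bound async call.

```javascript
// Hypothetical sketch: resolve with the native result, or with a fallback
// value if the target app does not answer within timeoutMs.
function withTimeout(promise, timeoutMs, fallback) {
  let timer;
  const timeout = new Promise((resolve) => {
    timer = setTimeout(() => resolve(fallback), timeoutMs);
  });
  // Whichever settles first wins; always clear the timer afterwards.
  return Promise.race([promise, timeout]).finally(() => clearTimeout(timer));
}

// Stand-in for an Accessibility query that answers after delayMs.
function slowNativeQuery(delayMs, value) {
  return new Promise((resolve) => setTimeout(() => resolve(value), delayMs));
}

(async () => {
  const fast = await withTimeout(slowNativeQuery(10, "visible text"), 500, "");
  const hung = await withTimeout(slowNativeQuery(2000, "too late"), 500, "");
  console.log(JSON.stringify(fast), JSON.stringify(hung)); // "visible text" ""
})();
```

A JavaScript-side race only stops waiting; it cannot cancel a native call that is stuck, which is one reason to run the Accessibility query off the main thread in the first place.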

libInputHelper — The Text Insertion Engine

This is where transcribed text gets typed into the target app. Four insertion strategies:

- insertText(text): direct AXUIElement text insertion via the Accessibility API
- insertRichText(html, text): HTML-aware insertion for rich text fields
- simulatePasteCommand(): simulates ⌘V by generating CGEvents for the V key
- performTextInsertion(text) / performRichTextInsertion(html, text): higher-level wrappers

The paste simulation is a fallback for apps that don’t support direct AX text insertion. The library saves the current clipboard contents (savePasteboard()), replaces them with the transcribed text, simulates ⌘V, then restores the original clipboard (restorePasteboard()). This is why Typeless works with apps that have non-standard text fields — it falls back to “paste” when direct insertion fails.
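The insert-then-paste fallback can be sketched against an injected native interface, which keeps the control flow testable without macOS. The method names insertText, savePasteboard, simulatePasteCommand, and restorePasteboard mirror the exports listed above; setPasteboardString is a hypothetical stand-in for however the library puts the transcript on the clipboard, and both treating a false return from insertText as failure and the delay before restoring are assumptions.

```javascript
// Hypothetical sketch: direct AX insertion first, clipboard paste second.
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

async function insertTranscript(native, text, restoreDelayMs = 150) {
  // Strategy 1: write straight into the focused element via AX.
  if (native.insertText(text)) return "ax";

  // Strategy 2: save clipboard, paste the transcript, restore clipboard.
  native.savePasteboard();
  try {
    native.setPasteboardString(text); // hypothetical: put transcript on clipboard
    native.simulatePasteCommand();    // synthesize Cmd+V key events
    // The target app reads the clipboard asynchronously after Cmd+V, so wait
    // briefly before putting the user's original contents back.
    await sleep(restoreDelayMs);
  } finally {
    native.restorePasteboard();
  }
  return "paste";
}

// Mock that rejects direct insertion, forcing the paste path.
const calls = [];
const mock = {
  insertText: () => false,
  savePasteboard: () => calls.push("save"),
  setPasteboardString: (t) => calls.push(`set:${t}`),
  simulatePasteCommand: () => calls.push("paste"),
  restorePasteboard: () => calls.push("restore"),
};
insertTranscript(mock, "hello", 0).then((how) => console.log(how, calls));
// paste [ 'save', 'set:hello', 'paste', 'restore' ]
```

The try/finally ensures the user’s clipboard is restored even if the synthetic paste throws.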

libKeyboardHelper — Global Keyboard Hooks

Uses CGEventTap to intercept keyboard events system-wide. The strings output reveals error handling for a critical macOS issue:

```text
CGEvent Tap disabled by user input!
CGEvent Tap disabled by timeout! This is the root cause of keyboard monitoring failure!
```

macOS automatically disables a CGEventTap if the tap callback takes too long to process events, as a safety mechanism to prevent system-wide input lag. The tap callback is notified through kCGEventTapDisabledByTimeout (and kCGEventTapDisabledByUserInput for the other case), which is presumably where these two messages come from. The library includes a watcher timer to detect when this happens and restart the tap. The main process polls accessibility permission status every 2 seconds and restarts the keyboard listener if it was re-granted.
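The permission polling described above can be sketched as a small state machine. The 2-second interval comes from the analysis; the class name, the isTrusted/restartListener callbacks, and restarting only on a not-granted-to-granted transition are assumptions made for illustration.

```javascript
// Hypothetical sketch: poll Accessibility permission and restart the
// keyboard listener when permission is (re-)granted.
class PermissionWatcher {
  constructor(isTrusted, restartListener) {
    this.isTrusted = isTrusted;             // e.g. a koffi-bound permission check
    this.restartListener = restartListener; // e.g. restart the CGEventTap monitor
    this.lastTrusted = isTrusted();
    this.timer = null;
  }

  // One polling step, exposed separately so it can be driven in tests.
  check() {
    const trusted = this.isTrusted();
    if (trusted && !this.lastTrusted) this.restartListener();
    this.lastTrusted = trusted;
  }

  start(intervalMs = 2000) {
    this.timer = setInterval(() => this.check(), intervalMs);
  }

  stop() {
    clearInterval(this.timer);
  }
}

let granted = false;
let restarts = 0;
const watcher = new PermissionWatcher(() => granted, () => restarts++);
watcher.check(); // still not granted: no restart
granted = true;
watcher.check(); // not granted -> granted: restart
watcher.check(); // still granted: no second restart
console.log(restarts); // 1
```

In an app, start() would drive check() every 2 seconds; the example calls check() directly so it exits immediately.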

libUtilHelper — System Integration

Handles audio device enumeration, system mute/unmute, microphone latency testing, lid open/close detection (for laptop microphone behavior), and device fingerprinting. The testAudioLatency function measures the round-trip time from recording to playback — critical for calibrating the voice input experience.

Core Design 3: The Audio Pipeline

The voice-to-text pipeline is the core of the app. Audio flows from microphone to text insertion in five steps:

1. Audio is captured in the renderer using the Web Audio API, not a native module. The renderer records WAV buffers and sends them to the main process via IPC.

2. Opus compression happens in a worker pool. The main process maintains a pool of up to 2 Node.js worker threads (opusWorker.js). Each worker loads libopusenc_unified_macos.dylib via koffi and calls opus_convert_advanced(), a C function that converts WAV to OGG/Opus at 16 kbps with a 20 ms frame size, VBR enabled, and the voice signal type. Running the encoder off the main thread keeps it from blocking the main process’s event loop, which also services IPC from the UI windows.

3. The compressed audio is sent as a FormData POST to api.typeless.com/ai/voice_flow with extensive context:

   - audio_file: compressed OGG/Opus file
   - audio_id: unique recording identifier
   - mode: "transcript" | "ask_anything" | "translation"
   - audio_context: JSON with text_insertion_point, cursor_state
   - audio_metadata: audio_duration, format info
   - parameters: { selected_text, output_language }
   - device_name: microphone hardware label
   - user_over_time: usage duration metric

4. The response contains the refined text and, optionally, delivery instructions, web_metadata, or external_action, which indicates the server can direct the app to perform actions beyond text insertion (like opening a URL or executing a command).

5. Text insertion uses Swift FFI: the keyboard:type-transcript IPC handler calls Ae.insertText(transcript) (Ae is a minified identifier), which routes through the koffi-bound libInputHelper.
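The “pool of up to 2 workers” in step 2 amounts to a concurrency limiter in front of the encoder. The sketch below is a generic limiter, not Typeless’s implementation: in the app each task would post a WAV buffer to an opusWorker.js thread, whereas here fakeEncode (hypothetical) simulates that with a timer so the queueing behavior can be observed.

```javascript
// Hypothetical sketch: run at most `size` encode jobs at once; extra jobs wait
// in a FIFO queue until a slot frees up.
function createPool(size) {
  let active = 0;
  const queue = [];

  const next = () => {
    if (active >= size || queue.length === 0) return;
    const { task, resolve, reject } = queue.shift();
    active++;
    Promise.resolve()
      .then(task)
      .then(resolve, reject)
      .finally(() => {
        active--;
        next(); // a slot freed up: start the next queued job, if any
      });
  };

  return {
    run(task) {
      return new Promise((resolve, reject) => {
        queue.push({ task, resolve, reject });
        next();
      });
    },
  };
}

let running = 0;
let peak = 0;
const fakeEncode = (ms) => async () => {
  running++;
  peak = Math.max(peak, running);
  await new Promise((r) => setTimeout(r, ms));
  running--;
  return `${ms}ms clip encoded`;
};

const pool = createPool(2);
Promise.all([30, 10, 20, 5].map((ms) => pool.run(fakeEncode(ms))))
  .then((results) => console.log(results.length, "done, peak concurrency", peak));
// 4 done, peak concurrency 2
```

Capping the pool bounds CPU use when several recordings finish close together, while the FIFO queue starts jobs in arrival order.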

Core Design 4: Context-Aware Transcription

Typeless doesn’t just transcribe audio — it sends the full application context to the server so the AI can produce contextually appropriate text. This context gathering is what makes Accessibility permission essential.

How macOS Accessibility Text Capture Works

The macOS Accessibility API (AXUIElement) is the mechanism Typeless uses to read text from other applications. Here’s the OS-level flow:

1. The app calls AXUIElementCreateSystemWide() to get a system-wide accessibility handle.
2. It queries kAXFocusedUIElementAttribute to find the currently focused text field in any app.
3. From that element, it reads kAXValueAttribute (full text content), kAXSelectedTextAttribute, and kAXVisibleCharacterRangeAttribute.

The critical detail: kAXValueAttribute returns the entire text content of a field — a 100K-word document returns all 100K words. There is no OS-level truncation. macOS’s only protection is a single binary gate: the user either grants Accessibility permission to the app, or doesn’t. There is no per-app or per-field granularity — once granted, Typeless can read text from any application.
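Because kAXValueAttribute hands back the entire field, any size limit has to be applied by the reading code rather than by the OS. A minimal sketch of such a bound, assuming limits are counted in JavaScript string units (UTF-16 code units) rather than model tokens; windowAroundCursor is a hypothetical helper, not a Typeless export, and slicing by code units can split a surrogate pair at the window edges.

```javascript
// Hypothetical sketch: keep at most `before` characters before the cursor and
// `after` characters after it, out of a field's full kAXValueAttribute text.
function windowAroundCursor(fullText, cursor, before, after) {
  const pos = Math.max(0, Math.min(cursor, fullText.length));
  return {
    textBefore: fullText.slice(Math.max(0, pos - before), pos),
    textAfter: fullText.slice(pos, pos + after),
  };
}

const doc = "a".repeat(100000) + "|" + "b".repeat(100000); // a 200K-character field
const ctx = windowAroundCursor(doc, 100000, 1000, 1000);
console.log(ctx.textBefore.length, ctx.textAfter.length, ctx.textAfter[0]); // 1000 1000 |
```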

The Three Context Data Sources

On every recording, Typeless gathers context from three distinct sources via its native Swift libraries:

| Data Source | What It Captures | API Used |
|---|---|---|
| Visible screen content | Up to 10K tokens of text visible on screen | getFocusedVisibleTextAsync(10000, {timeout: 500}) |
| Surrounding context | 1K tokens before + 1K tokens after the input area | getFocusedElementRelatedContentAsync(1000, 1000, {timeout: 500}) |
| Cursor state | Text before/after cursor, selected text | getCurrentInputState() via AXUIElement + AXTextMarkerRange |

The audio_context JSON

Here’s what the assembled context looks like in practice (reconstructed from field names in the extracted source):

```json
{
  "active_application": {
    "app_name": "Slack",
    "app_identifier": "com.tinyspeck.slackmacgap",
    "window_title": "#engineering - Acme Corp",
    "app_type": "native_app",
    "app_metadata": { "process_id": 1234, "app_path": "/Applications/Slack.app" },
    "visible_screen_content": "Alice: Can you look into the CI failure?\nBob: On it.\nYou: Let me check the deployment status for "
  },
  "text_insertion_point": {
    "input_area_type": "plain_text",
    "accessibility_role": "AXTextArea",
    "input_capabilities": { "is_editable": true, "supports_markdown": false },
    "cursor_state": {
      "cursor_position": 42,
      "has_text_selected": false,
      "selected_text": "",
      "text_before_cursor": "Let me check the deployment status for ",
      "text_after_cursor": ""
    },
    "surrounding_context": {
      "text_before_input_area": "Channel: #engineering",
      "text_after_input_area": ""
    }
  },
  "context_metadata": {
    "is_own_application": false,
    "capture_timestamp": "2025-02-16T00:00:00.000Z"
  }
}
```

This context serves two purposes:

- Better transcription: knowing you’re in a code editor vs. Slack helps the AI format the output appropriately
- App-specific behavior: the server returns external_action or web_metadata for certain apps, enabling actions like opening URLs

Core Design 5: The Privacy Architecture

Typeless requires macOS Accessibility permission, one of the most privileged permissions a user-space app can be granted. With it, the app can read text from any application, intercept keystrokes system-wide, and insert text into arbitrary text fields. Combined with full network access via a Node.js runtime, this creates a privacy surface that deserves its own architectural analysis.

What Leaves Your Machine

For non-blacklisted apps, the data sent on every recording includes:

- Audio: your voice, compressed as OGG/Opus
- Visible screen content: up to 10K tokens of text near the cursor (via getFocusedVisibleTextAsync)
- Cursor context: 1K tokens before cursor + 1K tokens after cursor (via getFocusedElementRelatedContentAsync)
- Selected text: up to 5K tokens (default) of highlighted text
- App metadata: bundle ID, app name, window title, web URL, window position
- Input field properties: AX role, editability, DOM identifiers (for web apps)

There is no user-facing toggle to disable context capture while keeping voice transcription. It’s all or nothing.

The Blacklist: Configuration and Purpose

The blacklist is Typeless’s mechanism for marking certain apps and URLs as “sensitive.” When an app is blacklisted, Typeless will not read its visible screen content or surrounding text — only minimal cursor-level state is still collected.

```javascript
app_blacklist: {
  macos: {
    exact: ["com.sublimetext.4", "com.tencent.xinWeChat",
            "com.microsoft.Excel", "com.kingsoft.wpsoffice.mac",
            "dev.zed.Zed"]
  }
}
url_blacklist: {
  prefix: ["https://docs.google.com/document/d",
           "https://docs.qq.com/doc/",
           "https://docs.qq.com/sheet/"]
}
app_whitelist: {
  macos: {
    exact: ["com.todesktop.230313mzl4w4u92", "com.tinyspeck.slackmacgap",
            "com.apple.mail", "com.figma.Desktop", "com.openai.atlas"]
  }
}
```

This configuration is hardcoded as a default and also fetched from the server (POST /app/get_blacklist_domain, encrypted with CryptoJS AES) and cached for 24 hours. If the server returns a newer version, it overrides the local defaults.
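The config implies two matching rules: bundle IDs match exactly, and URLs match by prefix, with a whitelist hit overriding a blacklist hit. A minimal sketch of those lookups over a trimmed copy of the config above; matchApp, matchUrl, and the url_whitelist field are hypothetical (the real getAppConfig and getUrlConfig are async and read the cached server copy), and only the (isWhitelist || !isBlacklist) predicate is taken from the extracted code.

```javascript
// Hypothetical sketch of the lookups implied by the config above (trimmed).
const config = {
  app_blacklist: { macos: { exact: ["com.microsoft.Excel", "dev.zed.Zed"] } },
  url_blacklist: { prefix: ["https://docs.google.com/document/d"] },
  app_whitelist: { macos: { exact: ["com.tinyspeck.slackmacgap"] } },
};

// Bundle IDs are compared exactly.
function matchApp(cfg, bundleId) {
  return {
    isBlacklist: cfg.app_blacklist?.macos?.exact?.includes(bundleId) ?? false,
    isWhitelist: cfg.app_whitelist?.macos?.exact?.includes(bundleId) ?? false,
  };
}

// URLs are compared by prefix; a missing URL (native app) matches nothing.
function matchUrl(cfg, url) {
  const hit = (list) => Boolean(url) && (list ?? []).some((p) => url.startsWith(p));
  return {
    isBlacklist: hit(cfg.url_blacklist?.prefix),
    isWhitelist: hit(cfg.url_whitelist?.prefix), // url_whitelist is assumed
  };
}

// Same predicate as the guard in the extracted code.
const allowed = (c) => c.isWhitelist || !c.isBlacklist;

console.log(allowed(matchApp(config, "com.microsoft.Excel")));                         // false
console.log(allowed(matchUrl(config, "https://docs.google.com/document/d/abc/edit"))); // false
console.log(allowed(matchUrl(config, undefined)));                                     // true
```

Prefix matching is what lets a single entry cover every Google Doc, while a Sheets URL (which does not start with the /document/d prefix) falls through as allowed.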

The Blacklist-Before-Read Design

The most important privacy design decision in the codebase: the blacklist check happens before the AX text read, not after. The three data sources from Core Design 4 have different blacklist behaviors:

| Data Source | Blacklist Gated? |
|---|---|
| Visible screen content | Yes: AX API never called for blacklisted apps |
| Surrounding context | Yes: AX API never called for blacklisted apps |
| Cursor state | No: always collected, no blacklist check |

Here’s the concrete code flow. For visible screen content:

```javascript
// Step 1: Get lightweight app metadata (bundleId, appName); no text read yet
const appInfo = getFocusedAppInfo();

// Step 2: Check blacklist BEFORE calling AX API
const appConfig = await getAppConfig(appInfo.bundleId);
const urlConfig = await getUrlConfig(appInfo.webURL);

// Step 3: Only read visible text if NOT blacklisted
if ((appConfig.isWhitelist || !appConfig.isBlacklist) &&
    (urlConfig.isWhitelist || !urlConfig.isBlacklist)) {
  visibleText = await getFocusedVisibleTextAsync(10000, { timeout: 500 });
}

// Blacklisted → visibleText stays undefined → AX API never called
```

The same guard protects surrounding context:

```javascript
if (element.editable && relatedContentParams && !isSelfApp) {
  const appConfig = await getAppConfig(appInfo.bundleId);
  const urlConfig = await getUrlConfig(appInfo.webURL);
  if ((appConfig.isWhitelist || !appConfig.isBlacklist) &&
      (urlConfig.isWhitelist || !urlConfig.isBlacklist)) {
    relatedContent = await getFocusedElementRelatedContentAsync(1000, 1000, { timeout: 500 });
  }
}

// Blacklisted → relatedContent stays empty → AX API never called
```

But cursor state has no blacklist check:

```javascript
// getCurrentInputState is called regardless of blacklist
if (inputStateParams && !isSelfApp) {
  cursorState = getCurrentInputState(...inputStateParams);
}

// This collects: text_before_cursor, text_after_cursor, selected_text
```

Despite its innocuous name, getCurrentInputState() is not a lightweight keyboard-state query: it’s a full Accessibility API read. The strings output from libInputHelper.dylib shows it uses AXFocusedUIElement, AXSelectedTextRange, and AXStringForTextMarkerRange, the same AX API family as the blacklist-protected functions, just without the blacklist gate.
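Putting the three excerpts together, the gating asymmetry can be modeled with a mock native layer that records which reads happen. This is a simplified reconstruction: collectContext, the isSensitive flag, and makeMockNative are hypothetical, timeouts and the editable/self-app checks are omitted, and only the control flow (two gated reads, one ungated read) follows the extracted code.

```javascript
// Hypothetical model of the control flow in the three excerpts above.
async function collectContext(native, isSensitive) {
  const ctx = { visibleText: undefined, relatedContent: undefined, cursorState: undefined };
  if (!isSensitive) {
    // Gated: these AX reads never happen for blacklisted apps/URLs.
    ctx.visibleText = await native.getFocusedVisibleTextAsync(10000);
    ctx.relatedContent = await native.getFocusedElementRelatedContentAsync(1000, 1000);
  }
  // Not gated: the cursor-level AX read happens either way.
  ctx.cursorState = native.getCurrentInputState();
  return ctx;
}

// Mock native layer that logs each call instead of touching AX.
function makeMockNative() {
  const calls = [];
  return {
    calls,
    getFocusedVisibleTextAsync: async () => { calls.push("visible"); return "screen text"; },
    getFocusedElementRelatedContentAsync: async () => { calls.push("related"); return "nearby text"; },
    getCurrentInputState: () => { calls.push("cursor"); return { beforeText: "Q3 Revenue: $" }; },
  };
}

(async () => {
  const excel = makeMockNative();
  await collectContext(excel, true);
  const slack = makeMockNative();
  await collectContext(slack, false);
  console.log(excel.calls, slack.calls);
  // [ 'cursor' ] [ 'visible', 'related', 'cursor' ]
})();
```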

Excel vs Slack: The Blacklist in Practice

To make this concrete, here’s what happens when you record in Microsoft Excel (blacklisted: com.microsoft.Excel) vs Slack (whitelisted: com.tinyspeck.slackmacgap):

What gets sent from Excel (blacklisted):

```javascript
{
  "active_application": {
    "app_name": "Microsoft Excel",
    "app_identifier": "com.microsoft.Excel",
    "visible_screen_content": undefined // ← AX API never called
  },
  "text_insertion_point": {
    "cursor_state": {
      "text_before_cursor": "Q3 Revenue: $", // ← still collected via AX API (no blacklist check)
      "text_after_cursor": "",
      "selected_text": ""
    },
    "surrounding_context": {
      "text_before_input_area": "", // ← AX API never called
      "text_after_input_area": ""
    }
  }
}
```

What gets sent from Slack (whitelisted):

```javascript
{
  "active_application": {
    "app_name": "Slack",
    "app_identifier": "com.tinyspeck.slackmacgap",
    "visible_screen_content": "Alice: Can you check the deployment?\nBob: On it.\nYou: Let me ..." // ← up to 10K tokens
  },
  "text_insertion_point": {
    "cursor_state": {
      "text_before_cursor": "Let me check the deployment status for ",
      "text_after_cursor": "",
      "selected_text": ""
    },
    "surrounding_context": {
      "text_before_input_area": "Channel: #engineering", // ← up to 1K tokens
      "text_after_input_area": ""
    }
  }
}
```

The blacklist effectively blocks the two largest data sources (visible_screen_content and surrounding_context), which together can contain up to 12K tokens. But cursor_state, which uses the same AX API under the hood, is always collected regardless of blacklist status. Its scope is limited to the focused text field (e.g., the current cell in Excel, the message input in Slack), not the entire screen. However, in apps with large text fields (code editors, document editors), the “text before cursor” could contain significant content.
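One detail of the Excel example is worth making concrete: visible_screen_content: undefined is a JavaScript value, not JSON. If the context is serialized with JSON.stringify before being placed in the audio_context form field (an assumption; the serialization call was not confirmed), undefined-valued keys are dropped entirely, so the server sees the key as absent rather than null.

```javascript
// What a blacklisted capture looks like after JSON serialization.
const excelContext = {
  active_application: {
    app_name: "Microsoft Excel",
    app_identifier: "com.microsoft.Excel",
    visible_screen_content: undefined, // AX read skipped by the blacklist
  },
  text_insertion_point: {
    cursor_state: { text_before_cursor: "Q3 Revenue: $", text_after_cursor: "", selected_text: "" },
  },
};

const wire = JSON.parse(JSON.stringify(excelContext));
console.log("visible_screen_content" in wire.active_application); // false
console.log(wire.text_insertion_point.cursor_state.text_before_cursor); // Q3 Revenue: $
```

The cursor-level text survives serialization untouched, which is exactly the part of the payload the blacklist does not cover.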

Additional Privacy Guards

Notion hardcoded exception. Even if Notion is not blacklisted, when the AX role is AXWebArea (the entire page rather than a specific text field), all cursor context is cleared:

```javascript
if (appInfo.isWebBrowser &&
    appInfo.webURL?.startsWith("https://www.notion.so/") &&
    element.role === "AXWebArea") {
  cursorState.startIndex = 0;
  cursorState.endIndex = 0;
  cursorState.beforeText = "";
  cursorState.afterText = "";
}
```

Design Tradeoffs

- The blacklist is server-controlled, not user-controlled. Typeless decides which apps are “sensitive,” and this can change with any server update. Users cannot add their own apps to the blacklist from the UI.

- The default coverage is narrow. Only ~5 apps and ~3 URL patterns are blacklisted. Common sensitive contexts — password managers, banking websites, medical portals, private Slack DMs — are not excluded by default.

- The guard is well-placed. The check-before-read pattern means the blacklist is a genuine privacy boundary, not just a transmission filter.

Core Design 6: The Data Model

Typeless uses Drizzle ORM with libSQL (Turso’s SQLite fork) for local storage, a more modern stack than raw SQLite. The schema is defined in the main process JavaScript.

The schema reveals a fascinating design choice: heavy denormalization. The focused_app_name, focused_app_bundle_id, focused_app_window_title, focused_app_window_web_title, focused_app_window_web_domain, and focused_app_window_web_url columns duplicate data from the focused_app JSON column. This exists to support the six database indexes:

- idx_history_user_created_at
- idx_history_user_status_created_at
- idx_history_user_app_name_status_created_at
- idx_history_user_app_bundle_id_status_created_at
- idx_history_user_app_name_web_domain_status_created_at
- idx_history_user_app_bundle_id_web_domain_status_created_at

These indexes enable fast filtering by app, by website domain, and by status, which are the queries the history view in the Hub needs. Without denormalization, every such query would have to extract fields from the focused_app JSON blob at query time; SQLite can index such lookups only through expression indexes on json_extract(), so plain columns are the simpler route.
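The denormalization amounts to copying a few fields out of the focused_app JSON into dedicated columns at write time. A sketch of that mapping, assuming the focused_app object uses the appName/bundleId/windowTitle/webURL keys returned by getFocusedAppInfo(); the webTitle key, deriving web_domain from the URL’s hostname, and the toHistoryColumns name are assumptions.

```javascript
// Hypothetical sketch: flatten focused_app into the indexed history columns.
function toHistoryColumns(focusedApp) {
  let webDomain = null;
  if (focusedApp.webURL) {
    try {
      webDomain = new URL(focusedApp.webURL).hostname;
    } catch {
      webDomain = null; // malformed URL: leave the column empty
    }
  }
  return {
    focused_app: JSON.stringify(focusedApp), // original blob kept as-is
    focused_app_name: focusedApp.appName ?? null,
    focused_app_bundle_id: focusedApp.bundleId ?? null,
    focused_app_window_title: focusedApp.windowTitle ?? null,
    focused_app_window_web_title: focusedApp.webTitle ?? null, // assumed key
    focused_app_window_web_domain: webDomain,
    focused_app_window_web_url: focusedApp.webURL ?? null,
  };
}

const row = toHistoryColumns({
  appName: "Google Chrome",
  bundleId: "com.google.Chrome",
  windowTitle: "Inbox",
  webURL: "https://mail.google.com/mail/u/0/",
});
console.log(row.focused_app_window_web_domain); // mail.google.com
```

With these columns populated, a history query filtering by user, bundle ID, web domain, and status can be served by idx_history_user_app_bundle_id_web_domain_status_created_at instead of parsing JSON row by row.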

The three electron-store JSON files provide fast key-value access for settings that don’t need SQL queries:

| Store | Keys | Purpose |
|---|---|---|
| app-onboarding | isCompleted, onboardingStep | Onboarding progress |
| app-settings | keyboardShortcut, selectedLanguages, selectedMicrophoneDevice, autoSelectLanguages, enabledOpusCompression, dynamicMicrophoneDegradationEnabled, preferredBuiltInMicId, translationModeTargetLanguageCode, microphoneDevices, preferredLanguage | User preferences |
| app-storage | runtime data | Ephemeral state |

Core Design 7: Module Boundaries Revealed by 77 IPC Methods

Typeless uses a centralized IPC handler registry with 77 methods. What’s interesting is what the namespace prefixes reveal about how the codebase is organized into modules. Each namespace:method prefix groups related functionality behind a clear boundary.

Typeless’s 77 handlers decompose into 13 IPC modules:

| Module | Methods | Core Responsibility |
|---|---|---|
| audio | 11 | The core module: voice capture, Opus compression, AI voice flow, abort control. Owns the entire pipeline from microphone to refined text. |
| db | 15 | Persistence: CRUD for transcription history, audio blob storage, cleanup policies. The history table is the central entity. |
| page | 14 | Presentation: window lifecycle (open/close/minimize), routing, devtools, onboarding flow. No business logic, pure UI orchestration. |
| permission | 5 | Platform integration: accessibility, microphone, screen capture permissions. Bridges the macOS security model to app state. |
| keyboard | 4 | Input handling: start/stop keyboard monitoring, type transcript into target app, watcher interval tuning. Owns the CGEventTap lifecycle. |
| user | 4 | Identity: login, logout, session state. Thin wrapper around token-based auth with api.typeless.com. |
| updater | 4 | Delivery: check, download, install. Manages the idle→checking→downloading→downloaded→installed state machine. |
| store | 1 | Configuration: single generic handler that dispatches to 3 electron-store files. Hides which store holds which key from the renderer. |
| file | 5 | Filesystem: manages the Recordings/ directory on disk (open, read size, save logs, clear). Separate from db because it owns the filesystem, not SQLite. |
| i18n | 3 | Localization: get/set/reset language across 58 locales. |
| device | 1 | Hardware: is-lid-open, laptop lid detection for microphone behavior. |
| microphone | 1 | Audio hardware: delay-test, latency measurement via Swift testAudioLatency. |
| context | 1 | App awareness: get-app-icon, locates the target app’s icon via its Info.plist. |

The namespace convention (audio:mute, db:history-upsert, page:open-hub) serves as a contract surface between the renderer and main process. Each prefix groups a cohesive set of operations that could, in theory, be extracted into a separate module without touching the others. The audio module depends on db (to fetch the WAV blob before compression) and keyboard (to insert the result), but page depends on nothing — it’s pure window management.

One design detail worth noting: store:use is a single handler that accepts { store, action, key, value } and dispatches to one of three electron-store files. This hides the storage topology from the renderer — it doesn’t need to know that settings, onboarding state, and runtime storage live in separate files. It just calls store:use with a store name.
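The single-handler pattern can be sketched with in-memory Maps standing in for the three electron-store files. The { store, action, key, value } payload and the store names come from the analysis; the action names (get, set, delete) and the error handling are assumptions. In the app, such a function would be registered with something like ipcMain.handle("store:use", (_event, payload) => storeUse(payload)).

```javascript
// Hypothetical sketch of one "store:use" handler fronting three stores.
// In-memory Maps stand in for the electron-store files.
const stores = new Map([
  ["app-settings", new Map()],
  ["app-onboarding", new Map()],
  ["app-storage", new Map()],
]);

function storeUse({ store, action, key, value }) {
  const target = stores.get(store);
  if (!target) throw new Error(`unknown store: ${store}`);
  switch (action) {
    case "get":
      return target.get(key);
    case "set":
      target.set(key, value);
      return value;
    case "delete":
      return target.delete(key);
    default:
      throw new Error(`unknown action: ${action}`);
  }
}

// Renderer-side calls name a store, never a file path.
storeUse({ store: "app-settings", action: "set", key: "preferredLanguage", value: "en" });
console.log(storeUse({ store: "app-settings", action: "get", key: "preferredLanguage" })); // en
```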

Core Design 8: Three Voice Modes, One Pipeline

Typeless doesn’t have three features — it has one audio pipeline with a polymorphic mode parameter. The same microphone capture, the same Opus compression, the same /ai/voice_flow endpoint, but the mode field changes the server’s behavior entirely:

| Mode | API Value | Trigger | Server Behavior |
|---|---|---|---|
| Voice Transcript | transcript | Hold Fn (push-to-talk) or Fn+Space (hands-free) | Transcribe speech → refine text → insert into focused app |
| Voice Command | ask_anything | Separate shortcut | Transcribe speech → interpret as instruction → return external_action (open URL, execute command) |
| Voice Translation | translation | Fn+Shift | Transcribe speech → translate to output_language → insert translated text |

This is the Strategy pattern at the API boundary. The client doesn’t implement three different pipelines — it sends different mode + parameters payloads and the server returns different response shapes:

- Transcript mode: response has refine_text (the cleaned-up transcription to insert)
- Command mode: response has external_action and web_metadata (the app should do something, not just type)
- Translation mode: request includes output_language from mode_meta; response has refine_text in the target language

The command mode is architecturally different from the other two — it reads selected_text from the cursor state (via audio_context.text_insertion_point.cursor_state.selected_text) and uses it as context for the AI instruction. This means “ask anything” operates on what you’ve highlighted, while transcript and translation operate on what you’ve spoken.
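With one pipeline and three modes, the client-side difference is mostly in how the request is assembled and which response field is acted on. A sketch using Node’s built-in FormData and Blob (Node 18+): the form field names and the refine_text/external_action response fields come from the analysis, while the function names, the audio/ogg content type, the file name, and which parameters keys are filled per mode are assumptions. The request is built but never sent.

```javascript
// Hypothetical sketch: one request builder, three modes.
const MODES = new Set(["transcript", "ask_anything", "translation"]);

function buildVoiceFlowForm({ mode, audioId, oggBytes, audioContext, durationSec, selectedText, outputLanguage }) {
  if (!MODES.has(mode)) throw new Error(`unsupported mode: ${mode}`);
  const parameters = {};
  // Translation needs a target language; command mode works on the selection.
  if (mode === "translation") parameters.output_language = outputLanguage;
  if (mode === "ask_anything") parameters.selected_text = selectedText ?? "";

  const form = new FormData();
  form.append("audio_file", new Blob([oggBytes], { type: "audio/ogg" }), `${audioId}.ogg`);
  form.append("audio_id", audioId);
  form.append("mode", mode);
  form.append("audio_context", JSON.stringify(audioContext));
  form.append("audio_metadata", JSON.stringify({ audio_duration: durationSec }));
  form.append("parameters", JSON.stringify(parameters));
  return form;
}

// Which response field the client acts on, per mode.
function pickResult(mode, response) {
  return mode === "ask_anything"
    ? { kind: "action", value: response.external_action }
    : { kind: "insert", value: response.refine_text };
}

const form = buildVoiceFlowForm({
  mode: "translation",
  audioId: "rec-001",
  oggBytes: new Uint8Array([0x4f, 0x67, 0x67, 0x53]), // "OggS" magic only, not real audio
  audioContext: { text_insertion_point: { cursor_state: { selected_text: "" } } },
  durationSec: 1.2,
  outputLanguage: "ja",
});
console.log(form.get("mode"), JSON.parse(form.get("parameters")).output_language); // translation ja
console.log(pickResult("transcript", { refine_text: "Hello." })); // { kind: 'insert', value: 'Hello.' }
```

Adding a fourth mode in this shape means touching the mode set and the response picker, not the capture or compression stages.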

The shortcut system itself is configurable and stored in electron-store with full key mapping for every physical key on the keyboard — 100+ entries including numpad, function keys, and international keys like Eisu and Kana for Japanese keyboard layouts. This is necessary because the keyboard hooks operate at the CGEventTap level (raw key codes), not at the character level.
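Working at the key-code level means shortcuts are sets of physical keys, not characters. A sketch of matching the default chords from the mode table, using macOS virtual key codes from Carbon’s Events.h (kVK_Function = 0x3F, kVK_Space = 0x31, kVK_Shift = 0x38, kVK_RightShift = 0x3C); the detectChord function and its matching rules are illustrative, not Typeless’s ShortcutDetector.

```javascript
// Hypothetical sketch: map the set of currently held key codes to a voice mode.
// Key codes are macOS virtual key codes (Carbon Events.h).
const KEY = { Fn: 0x3f, Space: 0x31, Shift: 0x38, RightShift: 0x3c };

function detectChord(held) {
  if (!held.has(KEY.Fn)) return null;
  if (held.has(KEY.Shift) || held.has(KEY.RightShift)) return "translation";
  if (held.has(KEY.Space)) return "transcript (hands-free)";
  if (held.size === 1) return "transcript (push-to-talk)";
  return null; // Fn plus some other key: not a chord in this sketch
}

console.log(detectChord(new Set([0x3f])));       // transcript (push-to-talk)
console.log(detectChord(new Set([0x3f, 0x31]))); // transcript (hands-free)
console.log(detectChord(new Set([0x3f, 0x38]))); // translation
```

Because left and right Shift have different key codes, a key-code map needs an entry per physical key, which is consistent with the 100+ entries in the stored mapping.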

The Complete Tech Stack

Layer 1: Renderer (10.6 MB JS + 57 KB CSS + 65 lazy chunks)

| Library | Evidence | Purpose |
|---|---|---|
| React 18 | 170+ refs across bundles | UI framework |
| MUI (Material UI) | 640+ refs (Select, Drawer, Chip, Tabs, Dialog, etc.) | Component library |
| Recoil | 152 refs | State management |
| i18next | 15 refs in shared bundle | Internationalization (58 locales) |
| ECharts | 29 refs + echarts-for-react | Usage analytics charts |
| Framer Motion | 36 refs | Animations and transitions |
| Floating UI | @floating-ui/react in deps | Tooltip/popover positioning |
| Shiki | 5 refs | Syntax highlighting |
| Sentry | 107 refs | Error tracking (browser SDK) |
| Mixpanel | 5 refs | Product analytics |
| Microsoft Clarity | 16 refs (onboarding CSP allows clarity.ms) | Session replay / heatmaps |
| diff | dependency | Text difference computation |
| Markdown (remark + rehype + marked) | 30+ refs | Markdown rendering |
| Jotai | 3 refs | Lightweight atom state (possibly for sidebar) |
| Immer | 10 refs | Immutable state updates |
| @tanstack/react-virtual | dependency | Virtualized list rendering for history |
| notistack | dependency | Snackbar notifications |
| compare-versions | dependency | Version comparison for updates |

Layer 2: Main Process (254 KB, Node.js, Electron 33.4.11)

| Package | Purpose |
|---|---|
| drizzle-orm v0.44.2 | Type-safe ORM for SQLite schema and queries |
| @libsql/client v0.15.9 | Turso’s libSQL driver (SQLite fork with extensions) |
| sqlite3 v5.1.7 | Fallback/legacy SQLite driver |
| koffi v2.11.0 | FFI bridge to call Swift dylibs from JavaScript |
| electron-store v10.0.1 | JSON file persistence for settings (3 stores) |
| electron-updater v6.3.9 | Auto-update via typeless-static.com/desktop-release/ |
| @sentry/electron v7.5.0 | Error tracking and performance monitoring |
| node-schedule v2.1.1 | Scheduled tasks (history/disk cleanup) |
| undici v7.16.0 | HTTP client for API calls |
| crypto-js v4.2.0 | Encryption (audio context fingerprinting) |
| dotenv v16.5.0 | Environment configuration |
| plist v3.1.0 | Parse macOS Info.plist files (for app icons) |
| js-yaml v4.1.0 | YAML parsing (app-update.yml config) |
| diff v8.0.2 | Text diff computation |

Layer 3: Swift Native (5 dylibs + 1 binary, all universal x86_64 + arm64)

| Library | Functions | Technology |
|---|---|---|
| libContextHelper | getFocusedAppInfo, getFocusedElementInfo, getFocusedVisibleText, getFocusedElementRelatedContent, setFocusedWindowEnhancedUserInterface | macOS Accessibility API (AXUIElement) |
| libInputHelper | insertText, insertRichText, deleteBackward, getSelectedText, simulatePasteCommand, savePasteboard, restorePasteboard, findKeyCodeForCharacter, getCurrentInputState | CGEvent, NSPasteboard, AX text insertion |
| libKeyboardHelper | KeyboardMonitor.startMonitor, stopMonitor, processEvents, ShortcutDetector, KeyboardUtils | CGEventTap, key code mapping |
| libUtilHelper | getAudioDevicesJSON, muteAudio, unmuteAudio, isAudioMuted, testAudioLatency, deviceIsLidOpen, checkAccessibilityPermission, getDeviceId, launchApplicationByName | CoreAudio, IOKit, AVAudioInputNode |
| libopusenc | opus_convert_advanced, ope_encoder_* | libopus + libopusenc (C library) |
| macosCheckAccessibility | Single-purpose binary | Checks AX permission from child process |

Appendix: Extraction Methodology

Every finding comes from read-only static analysis:

| Step | Command | What it reveals |
|---|---|---|
| 1 | cat Info.plist | Bundle ID (now.typeless.desktop), version 1.0.2, build 83 |
| 2 | cat app-update.yml | Update feed: typeless-static.com/desktop-release/, arm64 channel |
| 3 | ls Contents/Frameworks/ | Electron + Squirrel + Mantle + ReactiveObjC |
| 4 | npx @electron/asar extract app.asar /tmp/out | Full Node.js source, renderer bundles |
| 5 | cat package.json | 21 direct dependencies, Vite build, Node ≥22 |
| 6 | find /tmp/out/dist -type f | 4 HTML entries, 65 JS chunks, 4 MJS entry points |
| 7 | grep -oE '"[a-z]+:[a-z][-a-zA-Z_]*"' main.js | 77 IPC channel names |
| 8 | find app.asar.unpacked -name "*.node" | 3 native addons: @libsql, koffi, sqlite3 |
| 9 | find Contents/Resources/lib -name "*.dylib" | 5 Swift dylibs |
| 10 | file *.dylib | Universal binaries (x86_64 + arm64) |
| 11 | strings libContextHelper.dylib | AXUIElement, getFocusedAppInfo, Accessibility API |
| 12 | nm -g libInputHelper.dylib | insertText, simulatePasteCommand, savePasteboard |
| 13 | strings libopusenc.dylib \| grep Users/ | Build path reveals monorepo name and developer |
| 14 | grep -oiE 'react\|mui\|recoil' *.js | Library identification by string frequency |
| 15 | grep -oE 'T\.handle\("[^"]*"' main.js | Complete IPC handler registry |

The Swift dylibs embed function signatures for panic messages. Minified JavaScript retains library identifiers. Electron apps ship package.json unencrypted. None of this requires a decompiler.

Analysis performed on Typeless v1.0.2, build 1.0.2.83, Electron 33.4.11 (Chrome 130.0.6723.191), macOS 15.6, Apple M4.

I’ve been using Typeless, a Voice keyboard that makes you smarter. Use my link to join and get a $5 credit for Typeless Pro: https://www.typeless.com/refer?code=ZEDSNDM