Reverse engineering Codex.app with lightweight static analysis — no decompiler, no disassembler, just asar extract, grep, and strings.

Introduction

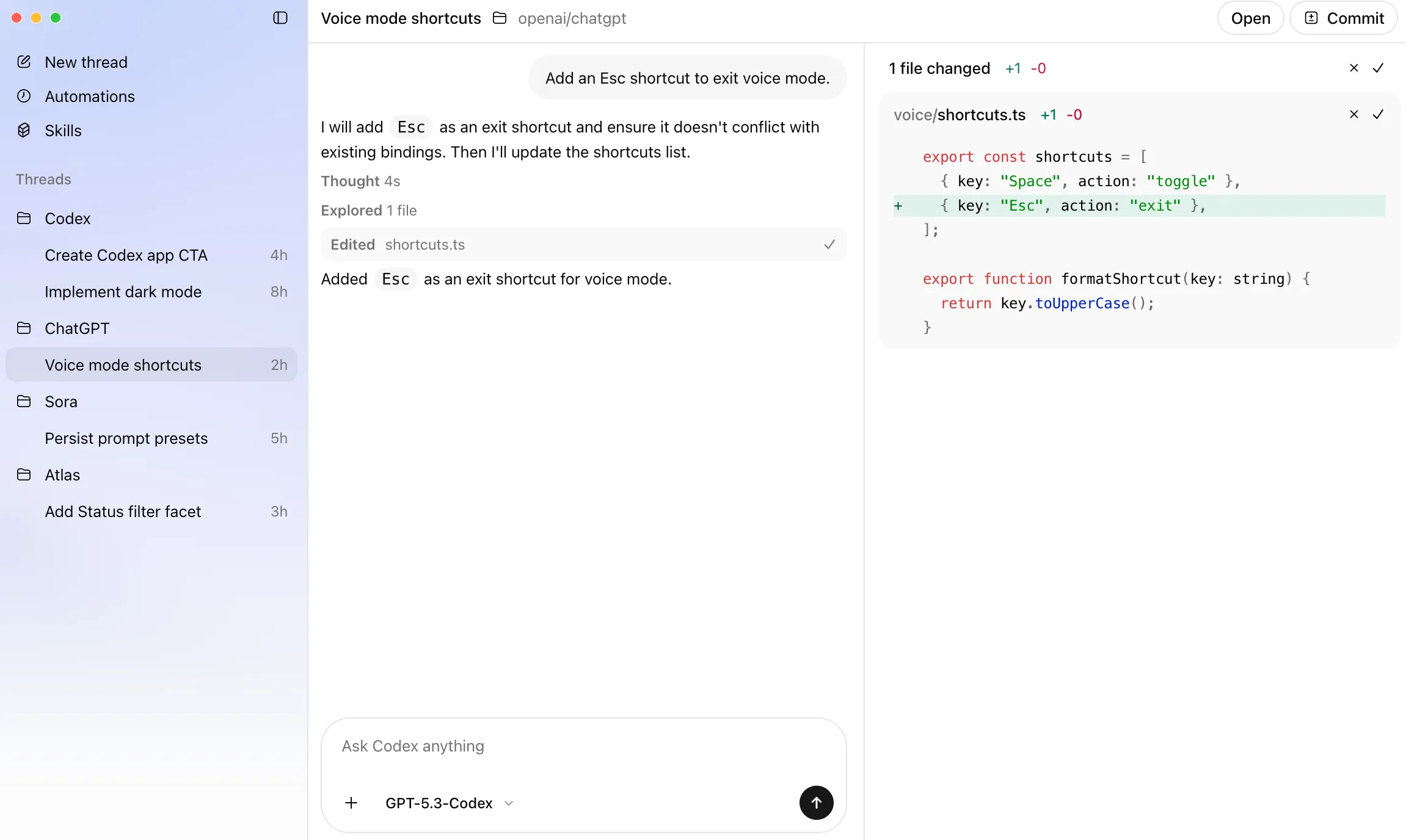

OpenAI shipped Codex as a standalone desktop application — not a VS Code extension, not a web app. I spent an afternoon reverse engineering its architecture from Codex v26.212.1823 (build 661, Electron 40.0.0) on macOS, using only standard CLI tools.

What I found isn’t just a chat UI bolted onto an API. It’s a full-featured development platform with a 70-method IPC API surface, a transparent auth proxy, a git-native workspace model, and a built-in automation/cron system — all coordinated across three process layers.

The Three-Layer Process Model

| Layer 1: Renderer (Chromium webview) | Layer 2: Main process (Node.js) | Layer 3: Rust CLI (codex binary) |
|---|---|---|
| React 18 | better-sqlite3 | tree-sitter |
| ProseMirror | node-pty | starlark |
| Radix UI | WebSocket client | rmcp (MCP) |
| Shiki | Sparkle updater | sqlx-sqlite |
| cmdk | Sentry | oauth2 + keyring |
| Framer Motion | Immer + Zod | tokio runtime |
| D3 / Mermaid | mime-types | reqwest + hyper |
| KaTeX / Cytoscape | shlex | OpenTelemetry |
| 6.5 MB JS bundle, 300 KB CSS, 433 lazy chunks | SQLite threads DB, file-based sessions, PTY shell sessions | 208 Rust crates, Mach-O arm64, OpenAI API calls |

The renderer and the main process talk over IPC; the main process and the Rust CLI talk over stdio and WebSocket.

This is a three-process architecture, but the interesting part isn’t the layers. It’s the boundaries between them and the design decisions at each boundary.

Core Design 1: CLI-as-Backend

The most important architectural decision is that the desktop app doesn’t contain a custom Rust backend — it wraps the same codex CLI available via Homebrew:

codex --version

# → codex-cli 0.98.0

file $(which codex)

# → Mach-O 64-bit executable arm64

The Electron app launches it with:

codex app-server --port <websocket-port>

The binary has 208 Rust crate dependencies (full list below). Two SQLite databases exist: better-sqlite3 (synchronous) in the main process for UI state, and sqlx-sqlite (async) in the Rust binary for conversation data. This avoids cross-process database locking. Here’s the complete schema extracted from both databases:

The schema reveals two distinct domains: the Rust binary owns conversation data (threads, thread_memory, thread_dynamic_tools, logs), while the Node.js main process owns UI and scheduling state (automations, automation_runs, inbox_items, global_state). The automation_runs.thread_id bridges the two — when an automation runs, it creates a thread in the Rust database and records the reference in the Node.js database.
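The `thread_id` bridge described above can be pictured as a lookup across two independent stores. The following is a minimal sketch, not the app's code: in-memory collections stand in for the two SQLite files, the `ThreadRow` shape and the `"PENDING"` status value are hypothetical, and only `thread_id`, `automation_id`, and `status` are column names taken from the schema.

```typescript
// Hypothetical row shapes. Only thread_id, automation_id, and status are
// column names from the schema discussed in the article.
interface ThreadRow { id: string; title: string }
interface AutomationRunRow { thread_id: string; automation_id: string; status: string }

// In-memory stand-ins for the two separate SQLite databases.
const rustThreads = new Map<string, ThreadRow>(); // owned by the Rust binary
const nodeRuns: AutomationRunRow[] = [];          // owned by the Node.js main process

// Record a run: the thread lives in one store and only its id is written to
// the other, so neither side needs to open the other's database.
function recordRun(automationId: string, threadId: string, title: string): void {
  rustThreads.set(threadId, { id: threadId, title });
  nodeRuns.push({ thread_id: threadId, automation_id: automationId, status: "PENDING" });
}

// Resolve the runs of one automation to their threads by following thread_id.
function threadsForAutomation(automationId: string): ThreadRow[] {
  const result: ThreadRow[] = [];
  for (const run of nodeRuns) {
    if (run.automation_id !== automationId) continue;
    const thread = rustThreads.get(run.thread_id);
    if (thread !== undefined) result.push(thread);
  }
  return result;
}

recordRun("auto-1", "thread-a", "Review open PRs");
recordRun("auto-2", "thread-b", "Update dependencies");
```

Because the only shared value is an opaque id, each process can keep its own connection, locking mode, and migration schedule.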

This design means the desktop app and the terminal CLI share the exact same core — improvements to one benefit both. The Electron layer adds windowing, a ProseMirror editor, and OAuth2 authentication, but the intelligence lives in Rust.

Core Design 2: The IPC Handler Registry

Electron splits an app into two kinds of process: the renderer (a Chromium browser context that runs the React UI) and the main process (Node.js, with full OS access). They can’t call each other’s functions directly; they communicate through IPC (inter-process communication), like two microservices talking over a message bus.

Most Electron apps implement IPC ad-hoc — ipcMain.handle('do-something', ...) scattered across files. Codex takes a different approach: a centralized handler registry — a single object mapping 70 method names to async handler functions:

// Simplified from the actual extracted code:

handlers = {

"git-push": async ({ branch, force }) => { ... },

"automation-create": async ({ name, prompt, rrule }) => { ... },

"read-file": async ({ path }) => { ... },

"account-info": async () => { ... },

// ... 66 more methods

}

This is essentially a typed RPC server inside the main process. The renderer calls it like:

// Renderer side — feels like calling a REST API

const result = await ipcRenderer.invoke("git-push", { branch: "main", force: false });

const info = await ipcRenderer.invoke("account-info");

const file = await ipcRenderer.invoke("read-file", { path: "/src/index.ts" });

The 70 methods break down into six domains:

| Domain | Methods | Examples |
|---|---|---|
| Git & PR | 14 | push, branch, merge-base, worktree snapshot, gh pr create |
| Automation | 11 | CRUD automations, run-now, archive, inbox |
| File & Environment | 12 | read/pick files, config resolution, agents.md |
| Workspace | 8 | multi-root management, pinned threads, title generation |
| Skills | 3 | discover, install, remove |
| System | 22 | auth, state, config, telemetry, editor launch |

Why this design matters:

- The renderer has zero business logic — it doesn’t know how git works, where files are, or how auth tokens refresh. It just calls named methods and renders results. This is the same separation you’d see between a mobile app and its backend API.

- Every handler gets an `origin` parameter identifying which window made the request. This enables multi-window support and per-window state.

- The registry is the single integration point: the VS Code compatibility bridge (`vscode://codex/protocol`) routes into the same handlers, meaning the webview can run in both Electron and VS Code without code changes.
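To make the registry idea concrete, here is a minimal sketch of a single dispatch function in front of a handler map. It is an illustration under assumptions: the `Origin` shape, the handler signature, and the placeholder bodies are hypothetical, and only the method names and the existence of an origin argument come from the extracted code.

```typescript
// Hypothetical shapes: the article only establishes that handlers are keyed by
// method name, are async, and receive an origin identifying the calling window.
type Origin = { windowId: number };
type Params = Record<string, unknown>;
type Handler = (origin: Origin, params: Params) => Promise<unknown>;

const handlers: Record<string, Handler> = {
  // Method names are from the article; the bodies are placeholders.
  "account-info": async (origin) => ({ requestedBy: origin.windowId }),
  "read-file": async (_origin, params) => ({ path: String(params["path"]), contents: "" }),
};

// Single entry point: every transport (an IPC message, or a vscode://codex/
// URL) would funnel into this function, so routing lives in one place.
async function dispatch(origin: Origin, method: string, params: Params = {}): Promise<unknown> {
  // Own-property check so names like "constructor" are not treated as handlers.
  const known = Object.prototype.hasOwnProperty.call(handlers, method);
  const handler = known ? handlers[method] : undefined;
  if (handler === undefined) {
    throw new Error(`Unknown method: ${method}`);
  }
  return handler(origin, params);
}
```

An unknown method rejects instead of failing silently, which keeps the renderer's error handling uniform across all 70 methods.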

Core Design 3: The Fetch Proxy Auth Gateway

The renderer makes HTTP requests via a custom fetch proxy in the main process. Instead of calling fetch() directly (which Electron’s security model restricts), the renderer sends structured messages over IPC:

Renderer: { type: "fetch-request", url: "/backend-api/...", method: "POST", body: "..." }

↓ IPC

Main Process: intercepts, attaches auth headers, calls electron.net.fetch()

↓

Main Process: { type: "fetch-response", status: 200, body: "..." }

↓ IPC

Renderer: receives response

The proxy does several critical things:

- Auto-attaches auth: if the target is `*.openai.com` or `*.chatgpt.com`, the proxy injects `Authorization: Bearer <token>` and `ChatGPT-Account-Id` headers automatically. The renderer never sees raw auth tokens.

- Token refresh: on 401 responses, the proxy calls `getAuthToken({ refreshToken: true })` and retries once. This is completely transparent to the renderer.

- VS Code protocol bridge: URLs starting with `vscode://codex/` are intercepted and routed to the handler registry instead of the network. This means the renderer can call internal APIs using the same `fetch()` pattern as external APIs.

- Relative URL resolution: bare paths like `/backend-api/conversation` are resolved against `CODEX_API_BASE_URL` (production: `chatgpt.com`, development: `localhost:8000`).

This pattern is similar to how mobile apps handle authentication — the network layer is completely abstracted. The renderer is a “dumb” client that doesn’t know how authentication works.
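The relative-URL resolution and the retry-once-on-401 behaviour can be sketched as follows. This is a hedged illustration, not the extracted implementation: `ProxyRequest`, `Transport`, `TokenSource`, `proxyFetch`, and the injected `needsAuth` predicate are hypothetical names, the transport is injected so the sketch does not depend on Electron, and the `ChatGPT-Account-Id` header is left out for brevity.

```typescript
// All names below are hypothetical; they only illustrate the behaviour described above.
interface ProxyRequest { url: string; method: string; headers: Record<string, string>; body?: string }
interface ProxyResponse { status: number; body: string }
type Transport = (req: ProxyRequest) => Promise<ProxyResponse>;
type TokenSource = (opts: { refreshToken: boolean }) => Promise<string>;

// Stands in for CODEX_API_BASE_URL (the production host named in the article).
const API_BASE_URL = "https://chatgpt.com";

// Bare paths are resolved against the base URL; absolute URLs pass through untouched.
function resolveUrl(url: string): string {
  return url.startsWith("/") ? API_BASE_URL + url : url;
}

// Attach a bearer token when the host policy allows it, and retry exactly once
// with a refreshed token if the first attempt comes back 401.
async function proxyFetch(
  req: ProxyRequest,
  transport: Transport,
  getAuthToken: TokenSource,
  needsAuth: (url: string) => boolean,
): Promise<ProxyResponse> {
  const url = resolveUrl(req.url);
  if (!needsAuth(url)) return transport({ ...req, url });

  const send = async (refreshToken: boolean): Promise<ProxyResponse> => {
    const token = await getAuthToken({ refreshToken });
    const headers = { ...req.headers, Authorization: `Bearer ${token}` };
    return transport({ ...req, url, headers });
  };

  const first = await send(false);
  return first.status === 401 ? send(true) : first;
}
```

The second attempt is not retried again, so a persistently rejected token surfaces to the caller as a plain 401 rather than looping.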

Core Design 4: Git as the Source of Truth

Most AI coding tools treat the filesystem as the context boundary — “open a folder, that’s your project.” Codex goes a level deeper: git is the context boundary, not the filesystem.

This is a fundamental design choice that shapes everything else:

The problem it solves: An AI coding agent needs to understand what it’s working on. “A folder” is ambiguous — is this the repo root? A subdirectory? A monorepo package? Are there files the agent should ignore? What’s changed? What’s the baseline to diff against? A naive agent answers none of these questions. Codex answers all of them by anchoring to git.

How it works concretely:

When you point Codex at a subdirectory, it doesn’t just open that folder — it resolves the full git context:

This means the agent knows:

- Which files are tracked, modified, or untracked (via `git status`)

- What the baseline is for any diff (via `git-merge-base`)

- Which files to ignore (via `.gitignore`, powered by the `ignore` crate)

- How to create branches, snapshot the working tree, and push changes
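The first step of that resolution, going from a subdirectory to the enclosing repository, can be sketched as an upward walk. This is an assumption about the approach, not the extracted code (a real implementation might simply shell out to `git rev-parse --show-toplevel`); `findRepoRoot` and the injected `hasGitEntry` predicate are hypothetical names, and the sketch assumes absolute POSIX paths.

```typescript
// Walk upward from a starting directory until one containing a ".git" entry
// is found. `hasGitEntry` is injected so the sketch needs no filesystem API.
// Assumes absolute POSIX paths such as "/repo/packages/app".
function findRepoRoot(startDir: string, hasGitEntry: (dir: string) => boolean): string | null {
  // Drop trailing slashes, but leave the filesystem root "/" alone.
  let dir = startDir.length > 1 ? startDir.replace(/\/+$/, "") : startDir;
  while (true) {
    if (hasGitEntry(dir)) return dir;
    if (dir === "/" || dir === "") return null; // reached the top without a match
    const idx = dir.lastIndexOf("/");
    dir = idx <= 0 ? "/" : dir.slice(0, idx);
  }
}
```

Called with `"/repo/packages/app"` and a predicate that is true only for `"/repo"`, the function returns `"/repo"`, which then becomes the anchor for status, diff, and ignore queries.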

Why this matters for an AI agent:

- Safe rollback: if the agent makes bad changes, git provides the undo. The `apply-patch` handler in the IPC registry can apply or revert patches atomically.

- Snapshot-based cloud execution: `prepare-worktree-snapshot` tarballs the working tree and `upload-worktree-snapshot` sends it to OpenAI’s infrastructure. This is how Codex runs tasks in the cloud: it doesn’t sync files one by one, it snapshots the git state. This only works because git gives you a clean boundary of “what is this project.”

- PR-native workflow: the agent can execute a full development cycle end-to-end:

The full GitHub CLI integration means Codex understands not just the code, but the development workflow around it.

The configuration layer built on top:

Codex uses .codex/environments/*.toml for per-project configuration, resolved hierarchically — closest to the working directory wins:

These configs use Starlark (Google’s deterministic Python dialect from Bazel), not for general scripting, but because Starlark is designed to be hermetic: evaluation cannot perform I/O or system calls unless the host application exposes them. You can write conditional config logic that evaluates to the same result on any machine, with no side effects. This is the kind of decision that only makes sense when you realize the agent will be evaluating untrusted configs from any repository it opens.
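The "closest to the working directory wins" rule can be sketched as a nearest-first search from the working directory up to the repository root. This is an assumption about how the lookup might be ordered, not the extracted logic: `candidateConfigDirs`, `resolveConfigDir`, and the injected `exists` predicate are hypothetical names, and only the `.codex/environments` path comes from the article.

```typescript
// List candidate config directories from the working directory up to the
// repository root, nearest first. Assumes absolute POSIX paths; returns an
// empty list when cwd is not inside repoRoot.
function candidateConfigDirs(cwd: string, repoRoot: string): string[] {
  const dirs: string[] = [];
  let dir = cwd;
  while (dir === repoRoot || dir.startsWith(repoRoot + "/")) {
    dirs.push(dir + "/.codex/environments");
    if (dir === repoRoot) break;
    dir = dir.slice(0, dir.lastIndexOf("/"));
  }
  return dirs;
}

// The first candidate that exists wins, which makes the nearest config
// shadow any config closer to the repository root.
function resolveConfigDir(cwd: string, repoRoot: string, exists: (dir: string) => boolean): string | null {
  for (const dir of candidateConfigDirs(cwd, repoRoot)) {
    if (exists(dir)) return dir;
  }
  return null;
}
```

Stopping at the repository root keeps the search bounded by the git context, so a config in an unrelated parent directory is never picked up.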

Core Design 5: The Automation Engine

Codex has a full cron/automation system built into the desktop app — not a server-side feature. The SQLite schema reveals:

CREATE TABLE IF NOT EXISTS automations (

id TEXT PRIMARY KEY,

name TEXT NOT NULL,

prompt TEXT NOT NULL,

status TEXT NOT NULL DEFAULT 'ACTIVE',

next_run_at INTEGER,

last_run_at INTEGER,

cwds TEXT NOT NULL DEFAULT '[]',

rrule TEXT -- RFC 5545 recurrence rule

);

CREATE TABLE IF NOT EXISTS automation_runs (

thread_id TEXT PRIMARY KEY,

automation_id TEXT NOT NULL,

status TEXT NOT NULL,

read_at INTEGER,

thread_title TEXT,

source_cwd TEXT,

inbox_title TEXT,

inbox_summary TEXT

);

CREATE TABLE IF NOT EXISTS inbox_items (

id TEXT PRIMARY KEY,

title TEXT

);

Key observations:

- RRule scheduling: uses RFC 5545 recurrence rules (the same format as iCal), with 62 references to `rrule` in the codebase. This is full-featured scheduling, not just simple intervals.

- Per-workspace CWDs: each automation stores a list of working directories, so the same prompt can run against multiple repositories.

- Inbox model: automation runs produce inbox items with titles and summaries. There’s an archive/delete flow for managing completed runs.

- Thread integration: each automation run creates a conversation thread (`thread_id`), so you can review what the agent did.
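To show how an `rrule` string could feed `next_run_at`, here is a deliberately tiny sketch. It handles only `FREQ` (HOURLY, DAILY, WEEKLY) and `INTERVAL`, ignores BYDAY, COUNT, UNTIL, time zones, and DST, and is in no way a substitute for the RRule library the app bundles. The function names and the fixed-step model are assumptions made for illustration.

```typescript
// Parse "FREQ=DAILY;INTERVAL=2" (optionally prefixed with "RRULE:") into a
// key/value map with upper-cased keys.
function parseRRule(rule: string): Map<string, string> {
  const parts = new Map<string, string>();
  for (const pair of rule.replace(/^RRULE:/i, "").split(";")) {
    const eq = pair.indexOf("=");
    if (eq > 0) parts.set(pair.slice(0, eq).toUpperCase(), pair.slice(eq + 1));
  }
  return parts;
}

const HOUR_MS = 60 * 60 * 1000;
const MS_PER_UNIT: Record<string, number | undefined> = {
  HOURLY: HOUR_MS,
  DAILY: 24 * HOUR_MS,
  WEEKLY: 7 * 24 * HOUR_MS,
};

// First occurrence strictly after `nowMs`, stepping from `startMs` in fixed
// intervals. Returns null for rules outside the supported subset.
function nextRunAt(rule: string, startMs: number, nowMs: number): number | null {
  const parts = parseRRule(rule);
  const unit = MS_PER_UNIT[(parts.get("FREQ") ?? "").toUpperCase()];
  const interval = Number(parts.get("INTERVAL") ?? "1");
  if (unit === undefined || !Number.isInteger(interval) || interval < 1) return null;
  if (nowMs < startMs) return startMs;
  const step = unit * interval;
  const stepsElapsed = Math.floor((nowMs - startMs) / step) + 1;
  return startMs + stepsElapsed * step;
}
```

Storing the computed value as an integer timestamp, as the `next_run_at INTEGER` column suggests, lets a scheduler find due automations with a simple comparison instead of re-evaluating every rule on each pass.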

The automation lifecycle:

This means Codex can run background coding tasks on a schedule — “every morning, review open PRs in this repo” or “every Friday, update dependencies” — entirely from the desktop, with no server-side scheduler.
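A local scheduler of this kind usually reduces to a periodic pass over the `automations` table. The sketch below is an assumption about that loop, not the extracted code: `tick`, `StartedRun`, the `"PAUSED"` status, and the injected `computeNext` and `newThreadId` callbacks are hypothetical, while the field names mirror the schema columns.

```typescript
// Field names mirror the schema columns; "PAUSED" is a hypothetical status
// (the schema only shows the 'ACTIVE' default).
interface Automation {
  id: string;
  prompt: string;
  status: "ACTIVE" | "PAUSED";
  next_run_at: number | null;
  last_run_at: number | null;
  cwds: string[]; // stored as a JSON array in the TEXT column
}
interface StartedRun { automation_id: string; thread_id: string; source_cwd: string }

// One scheduler pass: start a run per working directory for each due
// automation, then stamp last_run_at and advance next_run_at.
function tick(
  automations: Automation[],
  nowMs: number,
  computeNext: (automation: Automation, nowMs: number) => number | null,
  newThreadId: () => string,
): StartedRun[] {
  const started: StartedRun[] = [];
  for (const automation of automations) {
    const due =
      automation.status === "ACTIVE" &&
      automation.next_run_at !== null &&
      automation.next_run_at <= nowMs;
    if (!due) continue;
    for (const cwd of automation.cwds) {
      started.push({ automation_id: automation.id, thread_id: newThreadId(), source_cwd: cwd });
    }
    automation.last_run_at = nowMs;
    automation.next_run_at = computeNext(automation, nowMs);
  }
  return started;
}
```

Because the loop runs inside the desktop app, runs can only start while the app is open; the sketch starts each overdue automation once on the next pass and does not replay every missed occurrence.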

Core Design 6: The Editor Integration Layer

Codex supports opening files in 16 different editors:

vscode, vscodeInsiders, cursor, zed, sublimeText, bbedit,

textmate, windsurf, antigravity, xcode, androidStudio,

intellij, goland, rustrover, pycharm, webstorm

The open-file handler implements per-editor launch logic with line/column positioning, preferred editor persistence per workspace, and smart fallback (if the file is a binary format like PDF, it falls back to the system file manager regardless of preferred editor).
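Per-editor launch logic with a binary-file fallback might look like the sketch below. The command lines follow each editor's publicly documented CLI (`code --goto`, `zed`, `subl`, and macOS `open -R`), but how Codex actually invokes them is not visible from this analysis, so `buildOpenCommand`, the three-editor subset, and the extension list are all illustrative assumptions.

```typescript
type EditorId = "vscode" | "zed" | "sublimeText"; // subset of the 16 ids above
interface LaunchCommand { command: string; args: string[] }

// Illustrative list; the real set of "binary" formats is not known.
const BINARY_EXTENSIONS = [".pdf", ".png", ".zip"];

function buildOpenCommand(editor: EditorId, file: string, line = 1, column = 1): LaunchCommand {
  const lower = file.toLowerCase();
  if (BINARY_EXTENSIONS.some((ext) => lower.endsWith(ext))) {
    // macOS: reveal the file in Finder instead of opening it in a text editor.
    return { command: "open", args: ["-R", file] };
  }
  // All three CLIs accept the path:line:column form for positioning.
  const target = `${file}:${line}:${column}`;
  const byEditor: Record<EditorId, LaunchCommand> = {
    vscode: { command: "code", args: ["--goto", target] },
    zed: { command: "zed", args: [target] },
    sublimeText: { command: "subl", args: [target] },
  };
  return byEditor[editor];
}
```

Returning a command description instead of spawning a process keeps the per-editor rules testable without any editor installed.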

More interesting is the VS Code protocol compatibility. The main process implements handlers that mirror VS Code’s extension API surface:

// URLs starting with vscode://codex/ are intercepted

const ik = "vscode://codex/";

// Routed to the same handler registry as IPC calls

handleVSCodeRequest(origin, method, params)

This strongly suggests the Codex webview was originally designed to run inside VS Code as well as standalone Electron. The abstraction layer lets the same renderer code work in both contexts.
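The interception step can be sketched as a small classifier that decides whether a URL goes to the network or to the handler registry. Only the `vscode://codex/` prefix comes from the extracted code; treating the remainder of the path as the method name, and the `Route` and `classifyRequest` names, are assumptions made for this illustration.

```typescript
const VSCODE_PREFIX = "vscode://codex/";

type Route =
  | { kind: "internal"; method: string; query: string }
  | { kind: "network"; url: string };

// Decide where a request goes. Assumption: whatever follows the prefix, up to
// an optional "?", names the handler to call.
function classifyRequest(url: string): Route {
  if (!url.startsWith(VSCODE_PREFIX)) return { kind: "network", url };
  const rest = url.slice(VSCODE_PREFIX.length);
  const queryStart = rest.indexOf("?");
  if (queryStart === -1) return { kind: "internal", method: rest, query: "" };
  return {
    kind: "internal",
    method: rest.slice(0, queryStart),
    query: rest.slice(queryStart + 1),
  };
}
```

With a classifier like this in front of the proxy, the renderer uses one request pattern for both worlds, and only the main process knows which requests never leave the machine.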

How Authentication Actually Works

The auth system is more sophisticated than “store an API key”:

Key details:

- JWT structure: the token payload contains `https://api.openai.com/auth` (account ID, user ID, plan type) and `https://api.openai.com/profile` (email). The main process parses these for the `account-info` handler.

- Keychain storage: the Rust binary stores credentials in the macOS keychain via the `keyring` crate, not in config files or Electron’s `safeStorage`.

- OAuth2 local callback: the `tiny_http` crate spins up a temporary local HTTP server to receive the OAuth2 redirect. The `oauth2` crate manages the full PKCE flow.

- Domain allowlist: auth headers are only attached for `localhost`, `*.openai.com`, and `*.chatgpt.com`. The `ab.chatgpt.com` domain (Statsig A/B testing) is explicitly excluded.
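The allowlist with its explicit exclusion can be sketched as a hostname check. This is a reconstruction of the rule as described, not the extracted code: `shouldAttachAuth` is a hypothetical name, and whether the bare `openai.com` and `chatgpt.com` hosts match the wildcard is an assumption (the sketch allows them, since relative URLs resolve to `chatgpt.com` in production).

```typescript
const ALLOWED_DOMAINS = ["openai.com", "chatgpt.com"];
const EXCLUDED_HOSTS = ["ab.chatgpt.com"]; // Statsig A/B testing endpoint

// Decide whether auth headers may be attached for a given hostname.
function shouldAttachAuth(hostname: string): boolean {
  const host = hostname.toLowerCase();
  // Exclusions are checked first so they override the wildcard match below.
  if (EXCLUDED_HOSTS.some((excluded) => excluded === host)) return false;
  if (host === "localhost") return true;
  // Match the domain itself or a true subdomain. The leading dot matters:
  // without it, "evil-openai.com" would pass a plain endsWith check.
  return ALLOWED_DOMAINS.some((domain) => host === domain || host.endsWith("." + domain));
}
```

Checking the exclusion before the wildcard is the important ordering; reversing it would send credentials to the very host the rule is meant to protect against.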

The Complete Tech Stack

Layer 1: Renderer (6.5 MB JS + 300 KB CSS + 433 lazy chunks)

The notable choice is ProseMirror for the editor, not Monaco (a code editor) or a plain textarea. ProseMirror’s schema system lets them define custom node types for tool calls, file diffs, diagrams, and other structured content inline with text. It’s the same engine behind the editors at the New York Times and Atlassian.

| Library | Refs | Purpose |
|---|---|---|
| React | 1,235 | UI framework |
| Zod | 370 | Runtime schema validation (shared with main process) |
| Lottie | 325 | Animated illustrations (loading states, onboarding) |
| unified / remark / rehype | 245 | Markdown parsing pipeline (AST-based) |
| Sentry | 187 | Error tracking and performance monitoring |
| Statsig | 155 | Feature flags and A/B testing |
| sonner | 133 | Toast notifications |
| RRule | 93 | RFC 5545 recurrence rules for automation scheduling |
| xterm.js | 79 | Terminal emulator (with FitAddon, WebLinksAddon) |
| Radix UI | 77 | Headless accessible primitives (Dialog, Tooltip, Select, ContextMenu, etc.) |
| nanoid / uuid | 59 | Unique ID generation |
| DOMPurify | 57 | HTML sanitization for rendered markdown |
| clipboard | 50 | Copy-to-clipboard support |
| Mermaid | 42 | Diagram rendering (flowcharts, sequence, etc.) |
| Framer Motion | 40 | Animations and transitions |
| Immer | 37 | Immutable state management |
| cmdk | 27 | ⌘K command palette |
| ProseMirror | 25 | Rich text document editor (custom node types for tool calls, diffs) |
| KaTeX | 24 | LaTeX math rendering |
| Shiki | 16 | Syntax highlighting (400+ lazy-loaded grammars) |
| emoji | 14 | Emoji picker / rendering |
| @tanstack/react-form | 10 | Form state management |
| D3 | 9 | Data visualization (scales, shapes, selections) |
| Cytoscape | 6 | Graph / network visualization |
| micromark | 6 | Lightweight markdown tokenizer |
| dnd-kit | 5 | Drag-and-drop (sortable lists) |

Layer 2: Main Process (Node.js, Electron 40.0.0)

| Package | Purpose |
|---|---|
| better-sqlite3 | Local thread/session storage (synchronous) |
| node-pty | Real pseudo-terminal for shell command execution |
| ws + bufferutil + utf-8-validate | WebSocket communication with Rust backend |
| @sentry/electron + @sentry/node | Crash reporting and error tracking |
| immer | Immutable state updates |
| lodash + memoizee | Utility functions and memoization |
| zod (v4.1) | Runtime schema validation |
| smol-toml | TOML config parsing for .codex/ configs |
| shlex | Shell command tokenization |
| socks-proxy-agent | SOCKS proxy support for enterprise networks |
| mime-types + which | File type detection and binary lookup |

Layer 3: Rust CLI (208 crates, Mach-O arm64)

| Category | Crates | Purpose |
|---|---|---|
| Code Intelligence | tree-sitter, tree-sitter-highlight, pulldown-cmark, similar, diffy, ignore | AST parsing, markdown, diff, gitignore-aware traversal |
| Configuration | starlark, starlark_syntax, starlark_map, toml, toml_edit, serde_yaml | Starlark runtime + TOML/YAML config handling |
| Networking | reqwest, hyper, hyper-rustls, eventsource-stream, tiny_http | HTTP client/server, SSE streaming, OAuth callback |
| Async Runtime | tokio, tokio-stream, tokio-util, futures-util, async-channel | Concurrent task execution |
| Protocol | rmcp | Native MCP (Model Context Protocol) client |
| Storage | sqlx-core, sqlx-sqlite | Async SQLite for Rust-side persistence |
| Auth & Security | oauth2, keyring, ring, rustls | OAuth2 PKCE, OS keychain, TLS |
| File System | notify, fsevent-sys, globset | File watching (macOS FSEvents), glob matching |
| Terminal | portable-pty, process-wrap, signal-hook | PTY management, process control, signal handling |
| Media | image, png, tiff, zune-jpeg, fax | Image processing and format support |
| Compression | zip, zstd-safe, bzip2, xz2, flate2 | Archive handling for worktree snapshots |
| Encoding | chardetng, encoding_rs, base64 | Character detection, encoding conversion |
| Observability | sentry, opentelemetry, opentelemetry-otlp, tracing, tracing-subscriber | Error reporting + distributed tracing |
| System | os_info, sys-locale, system-configuration, chrono | Platform introspection |

What Makes This Architecture Work

The six designs above form a coherent system:

- CLI-as-backend means desktop and terminal share the same core — improvements to one benefit both

- The IPC registry creates a clean domain boundary — the renderer is a pure UI layer with zero business logic

- The fetch proxy solves auth transparently — no token management in renderer code, automatic refresh, consistent error handling

- Git as source of truth makes Codex context-aware — it understands your repository structure, not just your file system

- The automation engine turns a chat tool into a development platform — scheduled background agents, inbox for results

- The editor integration layer bridges Codex with 16 IDEs — and the VS Code protocol compatibility hints at a future where the same UI runs in both Electron and VS Code

The key insight: Codex is not a chat app with an API key. It’s a local development platform where the LLM is one component among many — git integration, code intelligence, workspace management, and scheduled automation are equally fundamental.

Appendix: Extraction Methodology

Every finding comes from read-only static analysis. Here’s the toolkit:

| Step | Command | What it reveals |
|---|---|---|
| 1 | `cat Info.plist` | App version, bundle ID, Electron version, update feed |
| 2 | `ls Contents/Frameworks/` | Sparkle, Squirrel, Electron framework versions |
| 3 | `npx @electron/asar extract app.asar /tmp/out` | Full Node.js source, package.json, webview assets |
| 4 | `cat package.json` | All dependencies, entry point, build scripts, monorepo structure |
| 5 | `find /tmp/out -type d` | Directory layout: .vite/, webview/, skills/, native/ |
| 6 | `grep -oE 'react\|radix\|cmdk' bundle.js \| sort \| uniq -c` | Library identification by string frequency |
| 7 | `file $(which codex)` | Binary architecture (Mach-O arm64) |
| 8 | `strings codex \| grep cargo/registry` | 208 Rust crate names from embedded source paths |
| 9 | `grep -oE '"[a-z]+-[a-z-]+":[[:space:]]*async' main.js` | 70 IPC handler method names |

Rust binaries embed source paths for panic messages and backtraces. Electron apps ship package.json unencrypted. Minified JavaScript retains library identifiers. None of this requires a decompiler — it’s the natural byproduct of how these tools are built.
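Steps 8 and 9 amount to pattern matching over text, which is easy to reproduce in a script once the raw output is saved. The sketch below is one way to do it, not the commands used for this analysis: the function names are hypothetical, and the crate pattern assumes cargo's usual `registry/src/<index>/<crate>-<version>/` path layout.

```typescript
// Collect IPC handler names from minified main-process source, mirroring the
// grep in step 9: a quoted, hyphenated, lower-case key followed by "async".
function extractHandlerNames(source: string): string[] {
  const names = new Set<string>();
  const pattern = /"([a-z]+-[a-z-]+)":\s*async/g;
  let match: RegExpExecArray | null;
  while ((match = pattern.exec(source)) !== null) {
    if (match[1] !== undefined) names.add(match[1]);
  }
  return Array.from(names).sort();
}

// Collect crate names from `strings` output by reading cargo registry source
// paths. The lazy name group stops at the first "-<semver>" suffix, so
// hyphenated crate names such as "tree-sitter-highlight" are kept whole.
function extractCrateNames(stringsOutput: string): string[] {
  const names = new Set<string>();
  const pattern = /cargo\/registry\/src\/[^/\s]+\/([A-Za-z0-9_-]+?)-\d+\.\d+\.\d+[^/\s]*\//g;
  let match: RegExpExecArray | null;
  while ((match = pattern.exec(stringsOutput)) !== null) {
    if (match[1] !== undefined) names.add(match[1]);
  }
  return Array.from(names).sort();
}
```

Deduplicating through a `Set` matters for both inputs: the same crate path appears once per embedded panic location, and a handler key can appear more than once in a bundle.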

Analysis performed on Codex v26.212.1823, build 661, Electron 40.0.0, macOS 15.6, Apple M4.